Documentation

Technical information

This submission trains a feedforward neural network (FFNN) on automatically generated datasets sampled from a polynomial function.

The website was built with the following languages and libraries:

- HTML

- CSS

- JavaScript

- TensorFlow

Why did I use TensorFlow?

TensorFlow is currently one of the most widely used frameworks for programming neural networks, deep learning models and other machine learning algorithms. But what are tensors? In its original meaning, a tensor denoted the absolute value (norm) of a quaternion, a hypercomplex number that extends the complex numbers. Today a tensor is usually understood as a generalization of scalars, vectors and matrices to any number of dimensions.

In TensorFlow, tensors flow through a so-called graph, which is the basis of how TensorFlow operates. The graph is an abstract representation of the underlying mathematical problem in the form of a directed diagram whose nodes are operations. In the graph-based TensorFlow 1.x API, placeholders hold the input and output data, while variables can change their values while the computation runs. The most important use of variables in neural networks is for the weight matrices (weights) and bias vectors (biases) of the neurons, which are adjusted continually to fit the data during training.
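To make the "generalization of scalars, vectors and matrices" concrete, here is a minimal sketch in plain JavaScript. It uses nested arrays as stand-ins for tensors; `shapeOf` is a hypothetical helper written for this illustration and is not part of TensorFlow.

```javascript
// Illustration only: plain nested arrays stand in for tensors here.
// `shapeOf` is a hypothetical helper, not a TensorFlow function.
function shapeOf(value) {
  const shape = [];
  let current = value;
  // Walk down the first element of each nesting level and record its length.
  while (Array.isArray(current)) {
    shape.push(current.length);
    current = current[0];
  }
  return shape;
}

const scalar = 3.5;                     // rank 0: no dimensions
const vector = [1, 2, 3];               // rank 1: one dimension of size 3
const matrix = [[1, 2, 3], [4, 5, 6]];  // rank 2: 2 rows, 3 columns

console.log(shapeOf(scalar).length); // 0
console.log(shapeOf(vector));        // shape [3]
console.log(shapeOf(matrix));        // shape [2, 3]
```

The number of entries in the shape is the rank of the tensor, so a weight matrix has rank 2 and a bias vector has rank 1.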

How the FFNN works

In a feedforward neural network, the connections between nodes do not form a cycle. Information always flows in one direction: from the input nodes, through the hidden nodes (if any), to the output nodes. Such a network contains neither cycles nor loops.

The input can be almost arbitrarily complex, but must always be numeric. Data types such as text or images must first be transformed into numeric data.

The output must also be numeric, but is usually less complex. Examples are a single scalar such as network load, or a binary-coded category such as whether or not a customer buys a product.

The hidden layers contain the actual functionality of the neural network. The values of the input neurons (X1, ..., Xn) are multiplied by weights and passed on to the neurons of the first hidden layer (h1, ..., hm). Each input neuron can project to every neuron of the first hidden layer. During training, the model is optimized by repeatedly adjusting these weights so that the prediction error decreases.
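The flow from input neurons through a hidden layer to the output can be sketched in a few lines of plain JavaScript. This is an illustration under assumptions, not the website's code: the function names, the ReLU activation and the hand-picked weights are all chosen for the example.

```javascript
// Minimal sketch of one forward pass; all names and numbers are illustrative.
// Assumption: ReLU is used as the hidden activation.
function relu(z) {
  return Math.max(0, z);
}

// One fully connected layer: each neuron j computes
// activation(sum_i weights[j][i] * inputs[i] + biases[j]).
function denseLayer(inputs, weights, biases, activation) {
  return weights.map((row, j) => {
    const sum = row.reduce((acc, w, i) => acc + w * inputs[i], biases[j]);
    return activation(sum);
  });
}

// Input -> hidden layer (ReLU) -> output layer (linear, for regression).
function forward(x, params) {
  const hidden = denseLayer(x, params.hiddenWeights, params.hiddenBiases, relu);
  return denseLayer(hidden, params.outputWeights, params.outputBiases, z => z);
}

// Hand-picked weights: 1 input, 2 hidden neurons, 1 output.
// With these values the network computes relu(x) + relu(-x), i.e. |x|.
const params = {
  hiddenWeights: [[1], [-1]],
  hiddenBiases: [0, 0],
  outputWeights: [[1, 1]],
  outputBiases: [0],
};

console.log(forward([-0.5], params)[0]); // 0.5
```

Training would replace the hand-picked numbers in `params` with values learned from data; the forward pass itself stays the same.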

Logical information

Combining TF and FFNN

My website is inspired by the TensorFlow tutorial "Making predictions from 2d data", whose approach I adapted to this task. The FFNN works on my website as follows:

First, the parameters have to be set. The adjustable parameters are Hidden Layers, Samples, Learn Rate, Epochs, Noise and Optimizer. Hidden Layers, Learn Rate, Epochs and Noise are set with sliders; Samples and Optimizer are selected from a dropdown menu. Finally, training is started by clicking "Start".
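To show how Learn Rate and Epochs enter the training process, here is a minimal sketch of gradient descent in plain JavaScript. It is deliberately simplified: it fits a straight line instead of a neural network, and the `settings` object and `trainLinear` function are hypothetical names for this illustration, not the website's code.

```javascript
// Hypothetical settings object mirroring two of the controls described above.
const settings = { learnRate: 0.1, epochs: 200 };

// Fits y = w * x + b by full-batch gradient descent on the mean squared error.
function trainLinear(xs, ys, { learnRate, epochs }) {
  let w = 0;
  let b = 0;
  const n = xs.length;
  // One epoch is one full pass over the training data.
  for (let epoch = 0; epoch < epochs; epoch++) {
    let gradW = 0;
    let gradB = 0;
    for (let i = 0; i < n; i++) {
      const error = w * xs[i] + b - ys[i];
      gradW += (2 / n) * error * xs[i];
      gradB += (2 / n) * error;
    }
    // The learning rate scales how far each update moves the parameters.
    w -= learnRate * gradW;
    b -= learnRate * gradB;
  }
  return { w, b };
}

// Toy data on the line y = 2x + 1, so the fit should approach w = 2, b = 1.
const xs = [-1, -0.5, 0, 0.5, 1];
const ys = xs.map(x => 2 * x + 1);
console.log(trainLinear(xs, ys, settings));
```

With very few epochs or a very small learning rate, the parameters barely move away from their starting values, which is one way a model ends up under-fitted.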

When training starts, the program generates random data points from the function y(x) = (x+0.8)*(x-0.2)*(x-0.3)*(x-0.6). These data points are used to perform a regression. During training, a window showing all the data pops up on the right side of the web page. After training has finished, the result is displayed below.
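The data generation step might look roughly like the following sketch in plain JavaScript. The polynomial is taken from the text; the sampling range of x, the uniform noise model and the names `polynomial` and `generateSamples` are assumptions made for this example, and the website may sample differently.

```javascript
// The target polynomial from the text.
function polynomial(x) {
  return (x + 0.8) * (x - 0.2) * (x - 0.3) * (x - 0.6);
}

// Assumptions: x is drawn uniformly from [-1, 1] and the noise is uniform
// in [-noise, noise]. `random` can be swapped out to make runs repeatable.
function generateSamples(count, noise, random = Math.random) {
  const samples = [];
  for (let i = 0; i < count; i++) {
    const x = random() * 2 - 1;
    const y = polynomial(x) + (random() * 2 - 1) * noise;
    samples.push({ x, y });
  }
  return samples;
}

// Example: 50 samples with a noise level of 0.02.
const data = generateSamples(50, 0.02);
console.log(data.length, data[0]);
```

A larger noise value scatters the points further from the curve, which makes the underlying polynomial harder for the network to recover.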

Parameter Adjustments

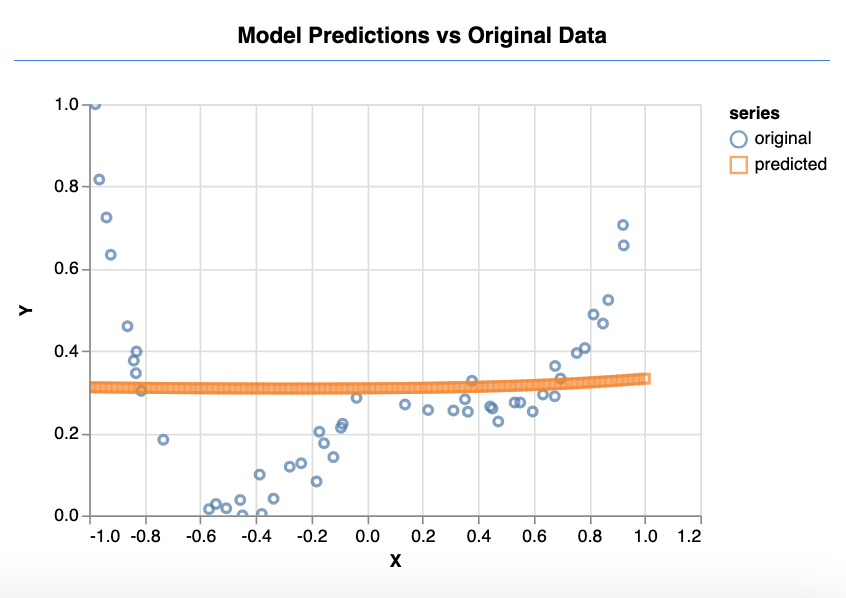

Under-Fitting

Hidden Layer: 1

Samples: 20

Learn Rate: 0.0001

Epochs: 1

Noise: 0.042

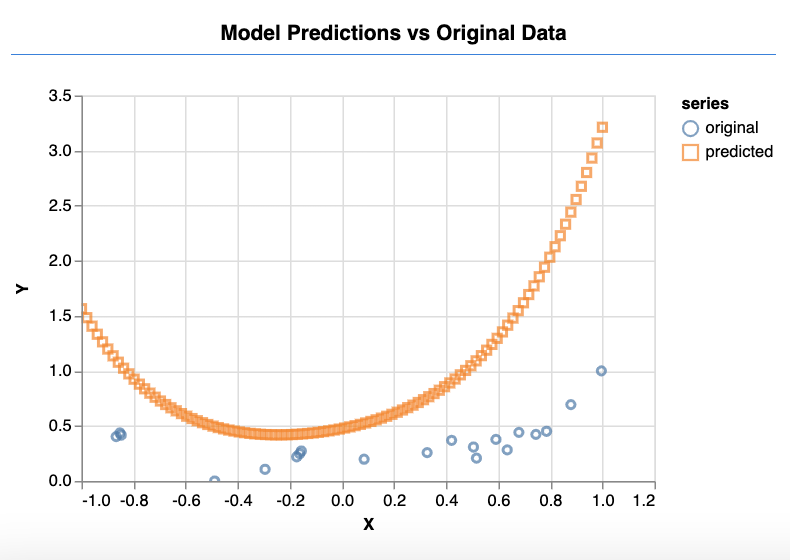

Over-Fitting

Hidden Layer: 100

Samples: 100

Learn Rate: 0.9999

Epochs: 100

Noise: 0.001

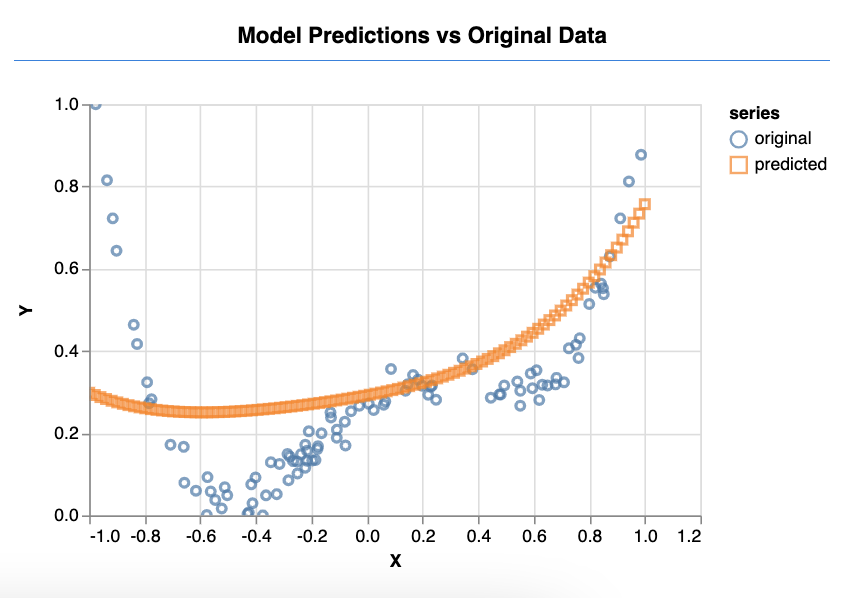

Best-Fit

Hidden Layer: 50

Samples: 50

Learn Rate: 0.5

Epochs: 50

Noise: 0.02